We are interested in enabling natural human-computer interaction by combining techniques from machine learning, computer vision, computer graphics, human-computer interaction and psychology. Specific areas that we focus on include multimodal human-computer interfaces, affective computing, pen-based interfaces, sketch-based applications, intelligent user interfaces, and applications of computer vision and machine learning to real-world problems. Browse through the publications and research pages to get a flavor of IUI@Koc.

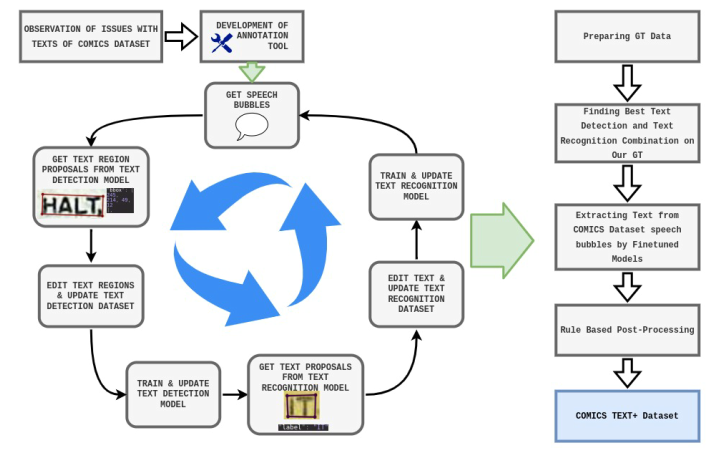

This study focuses on improving the optical character recognition (OCR) data for panels in the COMICS dataset, the largest dataset containing text and images from comic books. To do this, we developed a pipeline for OCR processing and labeling of comic books and created the first text detection and recognition

Read more

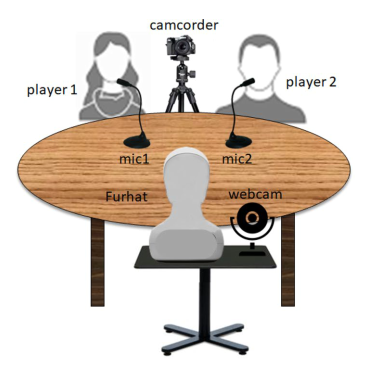

We present the engagement in human–robot interaction (eHRI) database containing natural interactions between two human participants and a robot under a story-shaping game scenario. The audio-visual recordings provided with the database are fully annotated at a 5-intensity scale for head nods and smiles, as well as with speech transcription and

Read more

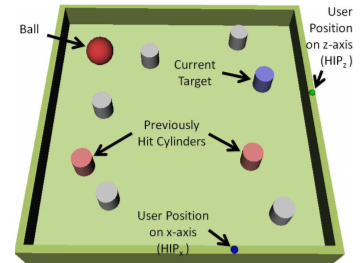

We investigate how collaborative guidance can be realized in multimodal virtual environments for dynamic tasks involving motor control. Haptic guidance in our context can be defined as any form of force/tactile feedback that the computer generates to help a user execute a task in a faster, more accurate, and subjectively more

Read more

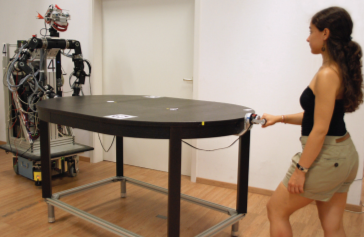

Since the strict separation of working spaces of humans and robots has experienced a softening due to recent robotics research achievements, close interaction of humans and robots comes rapidly into reach. In this context, physical human–robot interaction raises a number of questions regarding a desired intuitive robot behavior. The

Read more

We explore the effect of laughter perception and response in terms of engagement in human-robot interaction. We designed two distinct experiments in which the robot has two modes: laughter responsive and laughter non-responsive. In responsive mode, the robot detects laughter using a multimodal real-time laughter detection module and invokes laughter

Read more

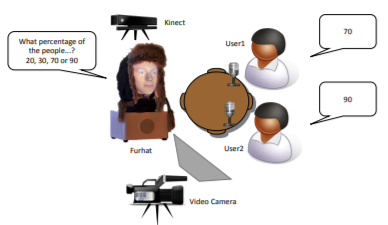

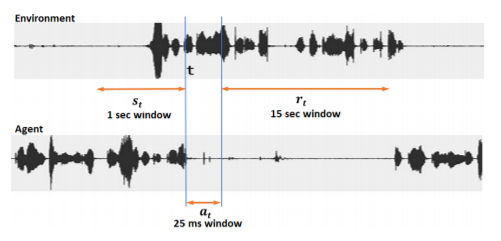

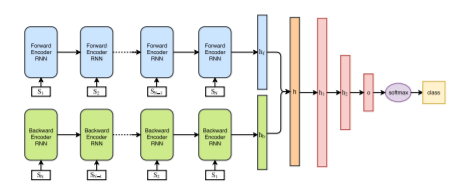

We present a novel method for training a social robot to generate backchannels during human-robot interaction. We address the problem within an off-policy reinforcement learning framework, and show how a robot may learn to produce non-verbal backchannels like laughs, when trained to maximize the engagement and attention of the user.

Read more

Hand-drawn objects usually consist of multiple semantically meaningful parts. For example, a stick figure consists of a head, a torso, and pairs of legs and arms. Efficient and accurate identification of these subparts promises to significantly improve algorithms for stylization, deformation, morphing and animation of 2D drawings. In this paper,

Read more

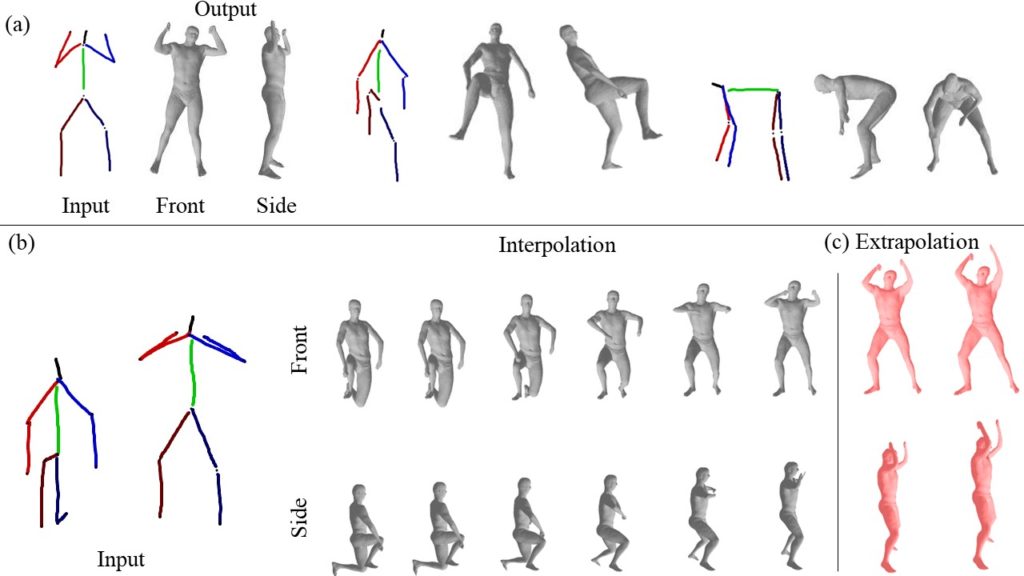

Generating 3D models from 2D images or sketches is a widely studied and important problem in computer graphics. We describe the first method to generate a 3D human model from a single sketched stick figure. In contrast to the existing human modeling techniques, our method requires neither a statistical body shape model

Read more

‘Serious games’ are becoming extremely relevant to individuals who have specific needs, such as children with an Autism Spectrum Condition (ASC). Often, individuals with an ASC have difficulties in interpreting verbal and non-verbal communication cues during social interactions. The ASC-Inclusion EU-FP7 funded project aims to provide children who have an

Read more

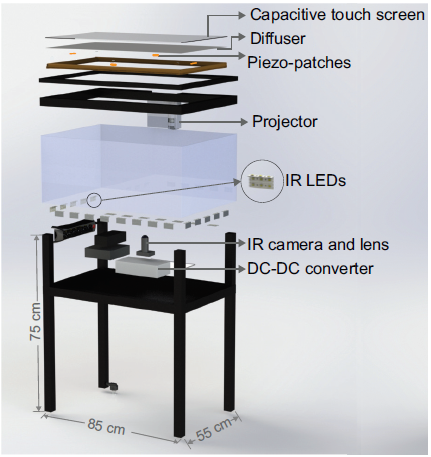

We present HapTable, a multimodal interactive tabletop that allows users to interact with digital images and objects through natural touch gestures, and receive visual and haptic feedback accordingly. In our system, hand pose is registered by an infrared camera and hand gestures are classified using a Support Vector Machine (SVM)

Read more