We are interested in enabling natural human-computer interaction by combining techniques from machine learning, computer vision, computer graphics, human-computer interaction, and psychology. Our focus areas include multimodal human-computer interfaces, affective computing, pen-based interfaces, sketch-based applications, intelligent user interfaces, and applications of computer vision and machine learning to real-world problems. Browse through the publications and research pages to get a flavor of IUI@Koc.

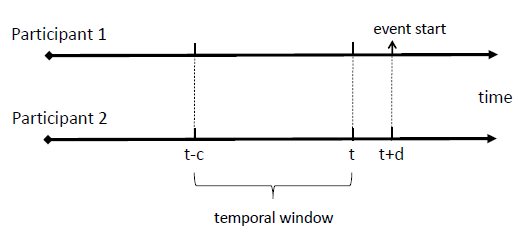

Head-nods and turn-taking both contribute significantly to conversational dynamics in dyadic interactions. Timely prediction and use of these events is quite valuable for dialog management systems in human-robot interaction. In this study, we present an audio-visual prediction framework for the head-nod and turn-taking events that can also be utilized in real-time

Read more

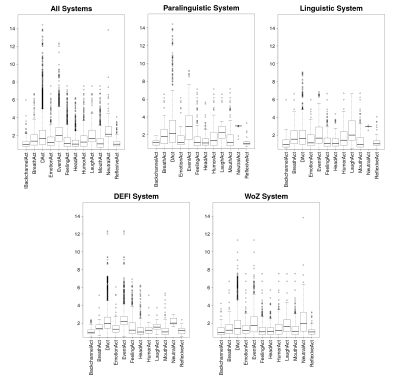

This paper addresses the problem of evaluating engagement of the human participant by combining verbal and nonverbal behaviour along with contextual information. This study will be carried out through four different corpora. Four different systems designed to explore essential and complementary aspects of the JOKER system in terms of paralinguistic/linguistic

Read more

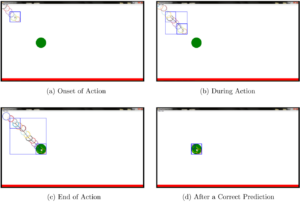

Human eyes exhibit different characteristic patterns during different virtual interaction tasks such as moving a window, scrolling a piece of text, or maximizing an image. Human-computer studies literature contains examples of intelligent systems that can predict a user’s task-related intentions and goals based on eye gaze behavior. However, these systems are

Read more

This work advances our understanding of children’s visualization literacy, and aims to improve it with a novel approach for teaching visualization at elementary schools. We first contribute an analysis of data graphics and activities employed in grade K to 4 educational materials, and the results of a survey conducted with

Read more

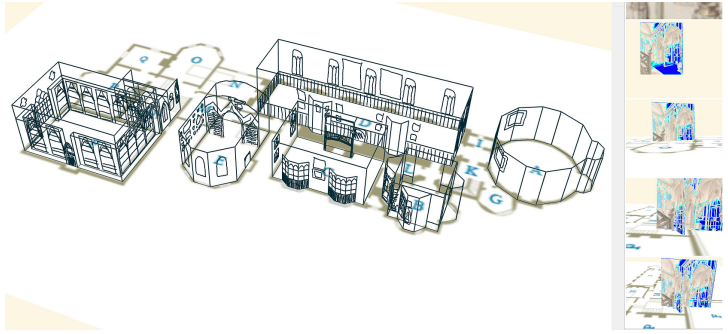

We present a work-in-progress report on a sketch- and image-based software called “CHER-ish” designed to help make sense of the cultural heritage data associated with sites within 3D space. The software is based on the previous work done in the domain of 3D sketching for conceptual architectural design, i.e., the

Read more

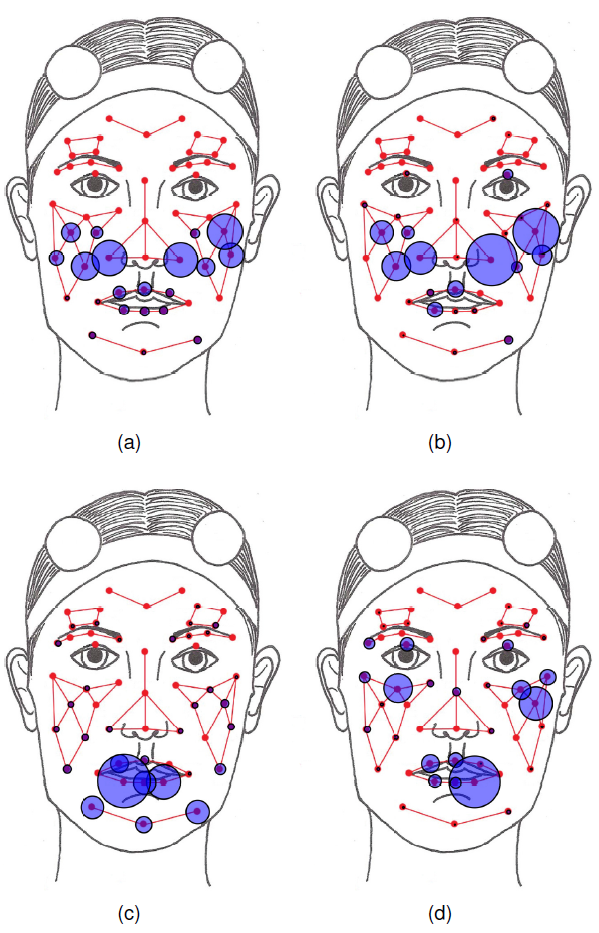

We address the problem of continuous laughter detection over audio-facial input streams obtained from naturalistic dyadic conversations. We first present meticulous annotation of laughters, cross-talks and environmental noise in an audio-facial database with explicit 3D facial mocap data. Using this annotated database, we rigorously investigate the utility of facial information,

Read more

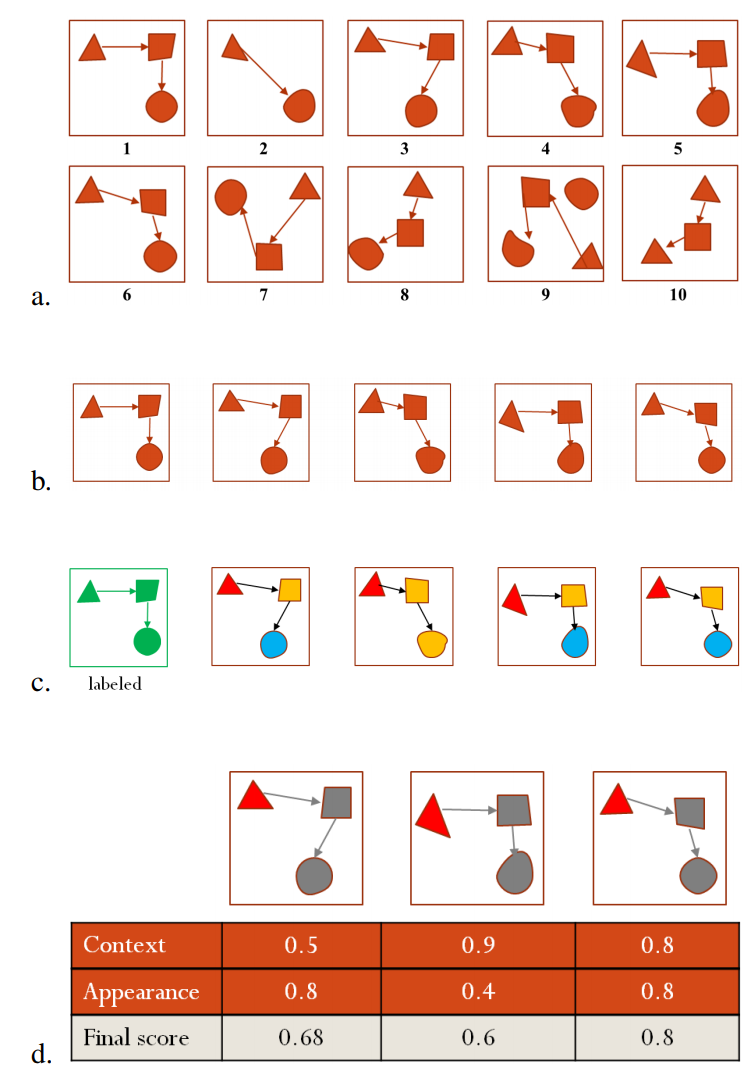

Sketch recognition is the task of converting hand-drawn digital ink into symbolic computer representations. Since the early days of sketch recognition, the bulk of the work in the field has focused on building accurate recognition algorithms for specific domains and well-defined data sets. Recognition methods explored so far have been

Read more

From a user interaction perspective, speech and sketching make a good couple for describing motion. Speech allows easy specification of content, events, and relationships, while sketching brings in spatial expressiveness. Yet, we have insufficient knowledge of how sketching and speech can be used for motion-based video retrieval, because there are no

Read more

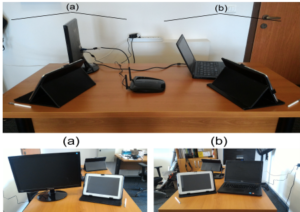

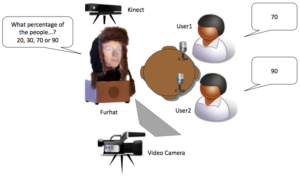

We explore the effect of laughter perception and response in terms of engagement in human-robot interaction. We designed two distinct experiments in which the robot has two modes: laughter responsive and laughter non-responsive. In responsive mode, the robot detects laughter using a multimodal real-time laughter detection module and invokes laughter

Read more