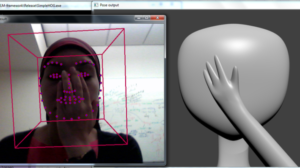

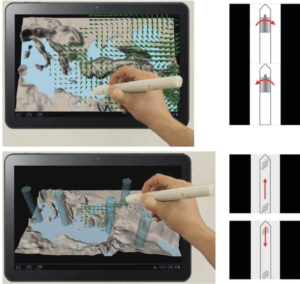

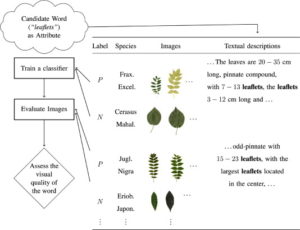

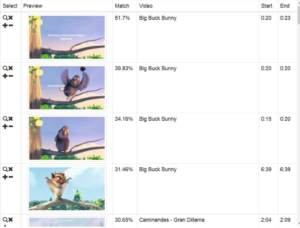

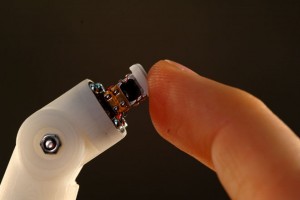

Individuals with Autism Spectrum Conditions (ASC) have marked difficulties using verbal and non-verbal communication for social interaction. The ASC-Inclusion project helps children with ASC learn how emotions can be expressed and recognised by playing games in a virtual world. The platform assists children with ASC in understanding and expressing emotions through facial expressions, tone of voice and body gestures. It combines several state-of-the-art technologies in one comprehensive virtual world, including analysis of users’ gestures and facial and vocal expressions using a standard microphone and webcam, training through games, text communication with peers and smart agents, animation, and video and audio clips. We present recent findings and evaluations of this serious game platform and provide results for the different modalities.

Authors: B. Schuller, E. Marchi, S. Baron-Cohen, A. Lassalle, H. O’Reilly, D. Pigat, P. Robinson, I. Davies, T. Baltrusaitis, M. Mahmoud, O. Golan, S. Fridenson, S. Tal, S. Newman, N. Meir, R. Shillo, A. Camurri, S. P., A. Staglianò, S. Bölte, D. Lundqvist, S. Berggren, A. Baranger, N. Sullings, T. M. Sezgin, N. Alyuz, A. Rynkiewicz, K. Ptaszek, K. Ligmann.