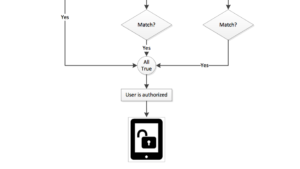

We propose a biometric authentication system for pointer-based systems, including but not limited to increasingly prominent pen-based mobile devices. To unlock a mobile device equipped with our biometric authentication system, all the user needs to do is manipulate a virtual object presented on the device display. The user can select among a range of familiar manipulation tasks, namely drag, connect, maximize, minimize, and scroll. These simple tasks take around 2 seconds each and do not require any prior education or training [ÇS15]. More importantly, we have found that each user has a characteristic way of performing these tasks. Features that express these characteristics are embedded in the user’s accompanying hand-eye coordination, gaze, and pointer behaviors. For this reason, as the user performs the selected task, we collect his/her eye gaze and pointer movement data using an eye gaze tracker and a pointer-based input device (e.g., a pen, stylus, finger, mouse, or joystick), respectively. Then, we extract meaningful and distinguishing features from this multimodal data to summarize the user’s characteristic way of performing the selected task. Finally, we authenticate the user through three layers of security: (1) the user must perform the manipulation task correctly (e.g., by drawing the correct pattern), (2) the user’s hand-eye coordination and gaze behavior during the task must conform to his/her hand-eye coordination and gaze behavior model in the database, and (3) the user’s pointer behavior during the task must conform to his/her pointer behavior model in the database.
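
The collect, extract, and three-layer authenticate pipeline described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Sample` type, the toy features (mean and peak movement speed, task duration, mean gaze-to-pointer distance as a crude hand-eye coordination cue), the per-feature z-score template standing in for the paper's behavior models, and the default threshold of 2.0 are all hypothetical. The sketch also assumes the gaze and pointer streams have already been resampled to shared timestamps.

```python
import math
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Sample:
    t: float  # timestamp in seconds
    x: float  # screen x in pixels
    y: float  # screen y in pixels


def speeds(samples):
    """Point-to-point speeds (px/s) along a trajectory."""
    return [
        math.hypot(b.x - a.x, b.y - a.y) / (b.t - a.t)
        for a, b in zip(samples, samples[1:])
        if b.t > a.t
    ]


def extract_features(gaze, pointer):
    """Hypothetical features; assumes both streams share timestamps."""
    # Hand-eye coordination cue: mean gaze-to-pointer distance per timestamp.
    gaze_pointer_gap = mean(
        math.hypot(g.x - p.x, g.y - p.y) for g, p in zip(gaze, pointer)
    )
    gaze_features = [mean(speeds(gaze)), gaze_pointer_gap]
    pointer_speeds = speeds(pointer)
    pointer_features = [
        mean(pointer_speeds),
        max(pointer_speeds),
        pointer[-1].t - pointer[0].t,  # task duration
    ]
    return gaze_features, pointer_features


@dataclass
class BehaviorModel:
    """Per-feature mean/std template; a simple stand-in for a trained model."""
    means: list
    stds: list

    @classmethod
    def enroll(cls, vectors):
        columns = list(zip(*vectors))
        return cls(
            means=[mean(c) for c in columns],
            stds=[max(pstdev(c), 1e-6) for c in columns],  # avoid divide-by-zero
        )

    def distance(self, vector):
        """Mean absolute z-score of a feature vector against the template."""
        return mean(abs(v - m) / s for v, m, s in zip(vector, self.means, self.stds))


def authenticate(task_correct, gaze_features, pointer_features,
                 gaze_model, pointer_model, threshold=2.0):
    """Apply the three security layers in order; any failure rejects."""
    if not task_correct:  # Layer 1: task performed correctly
        return False
    if gaze_model.distance(gaze_features) > threshold:  # Layer 2: gaze / hand-eye
        return False
    return pointer_model.distance(pointer_features) <= threshold  # Layer 3: pointer
```

Checking the layers in order means an incorrectly performed task is rejected before either behavior model is consulted. The z-score template could be swapped for a trained classifier without changing this control flow.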

Authors: Cagla Cig, T. Metin Sezgin.